You still need to know things.

This post is (sorta?) a follow-up to a post I made last October, titled Generalist Musings.

It’s a pretty brief post, but the short version is that I felt the current generation of LLMs can outperform generalists but underperforms specialists. That makes specialization very important, which is very bad for me because I am a generalist :(

I’m coming at it from the other end now. Last week, I tried my hand at designing a PCB for an electronic wearables project. I’ve only worked on PCB design once before, and I haven’t really studied electrical engineering or its underlying principles in any depth. So I’d definitely consider myself a beginner (bad). This is different from being at a generalist level (moderate) at a skill, or a specialist (good).

Because I know that I’m bad at PCB design, and I know that AI is generally alright at things, I figured I’d try out various AI tools to see if I could use them to kickstart the PCB design process. Unfortunately the experience was awful. I couldn’t get anything good out of them, or at least nothing I’d feel comfortable sending off to get printed.

So I thought of a couple reasons why this might be the case:

- LLMs suck :)

- PCB design is a hard problem that AI is worse at

- Beginners suck at using AI to solve problems.

I think we’re at a stage where I can get some sort of broad agreement that the first point isn’t quite true.

The second point, that PCB design is a hard problem, is pretty believable too. Automating PCB design is very complicated, and it’s something people have been working on for a while. It’s also hardware, which is a fundamentally different problem from coding, the main use of LLMs and the thing I associate them with being strong at.

Hardware presents several challenges: it’s not as ubiquitous in the training data, it’s difficult to iterate on to catch bugs and find mistakes (feedback is difficult), and it’s not digitally encoded very well. Furthermore, making a mistake while iterating is a lot more expensive: PCBs cost money and take time to ship, while hitting a compile error is free. What’s worse is when a hardware design has a subtle bug that makes it past QC, such as the Intel Pentium FDIV bug, which has far bigger implications than shipping bad software to prod.

However, this got me thinking: what would happen if we borrowed concepts from programming languages to describe PCB designs? It might allow a more powerful compiler/linter to catch bugs, which would make PCB and hardware design an easier problem to work with. Google’s PCBDL seems to exist, but the GitHub repository has been marked for archiving. I’m not good at any of PL, compiler design, or PCB design, but I know tools like OpenSCAD exist, and I wonder whether some sort of formal syntax and ruleset would reduce PCB design to an easier problem. I think it’s quite plausible that PCB design becomes a problem AI will eventually be able to solve. I’m not sure we’ll ever reach a point where PCB design can be “vibe-coded” in the way people seem to see programming headed, though, simply because mistakes are still going to be more expensive.
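To make the idea a bit more concrete, here’s a minimal sketch of what “a PCB described as code, plus a linter” could look like. Everything in it (`Board`, `Pin`, `lint`, the rule set) is a toy I made up for illustration. It is not the PCBDL or atopile API, and a real tool would need far more rules (electrical types, voltage levels, footprints, and so on).

```python
from dataclasses import dataclass

# Hypothetical toy DSL for illustration only; NOT the PCBDL or atopile API.

@dataclass(frozen=True, order=True)
class Pin:
    part: str  # reference designator, e.g. "U1"
    name: str  # pin name, e.g. "VCC"


class Board:
    def __init__(self):
        self.declared_pins = set()  # every pin of every part on the board
        self.nets = {}              # net name -> set of pins on that net

    def part(self, ref, pin_names):
        """Declare a part and return its pins keyed by pin name."""
        pins = {name: Pin(ref, name) for name in pin_names}
        self.declared_pins.update(pins.values())
        return pins

    def connect(self, net_name, *pins):
        """Attach pins to a named net, creating the net if needed."""
        self.nets.setdefault(net_name, set()).update(pins)


def lint(board, power="VCC", ground="GND"):
    """Return a list of human-readable problems found in the netlist."""
    errors = []
    connected = set()
    for name, pins in sorted(board.nets.items()):
        connected |= pins
        if len(pins) < 2:
            errors.append(f"net {name} has fewer than two pins")
    # Pins that were declared but never attached to any net.
    for pin in sorted(board.declared_pins - connected):
        errors.append(f"pin {pin.part}.{pin.name} is unconnected")
    # A pin that sits on both the power and ground nets is a dead short.
    shorted = board.nets.get(power, set()) & board.nets.get(ground, set())
    for pin in sorted(shorted):
        errors.append(f"pin {pin.part}.{pin.name} shorts {power} to {ground}")
    return errors


# A tiny board: battery, microcontroller, LED. The LED cathode is forgotten.
board = Board()
bat = board.part("BT1", ["+", "-"])
mcu = board.part("U1", ["VCC", "GND", "PA0"])
led = board.part("D1", ["A", "K"])
board.connect("VCC", bat["+"], mcu["VCC"])
board.connect("GND", bat["-"], mcu["GND"])
board.connect("LED", mcu["PA0"], led["A"])

print(lint(board))  # prints ['pin D1.K is unconnected']
```

The point is only that once the design is text with some structure, mistakes like a floating pin show up as a free “compile error” instead of a board that arrives in the mail and doesn’t work.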

Reflecting on some of my other experiences with cybersecurity Capture-The-Flag (CTF) competitions (where LLM usage is permitted and common), though, I’m inclined to think the third point is also a potential reason. I’d consider myself medium-good on domain-specific knowledge here. In CTF competitions there are problems (many now, honestly) that I’m able to solve entirely through a series of prompts to LLMs. But oftentimes I’ll be surprised to see that a problem has only a single-digit number of solves, when there are normally a thousand or so teams competing and LLM usage is allowed. So I think having domain-specific knowledge is very helpful, and that as a generalist, being at a medium skill level lets us actually leverage LLMs as tools for a variety of tasks, while a specialist can only use them to accelerate their own specific workload.

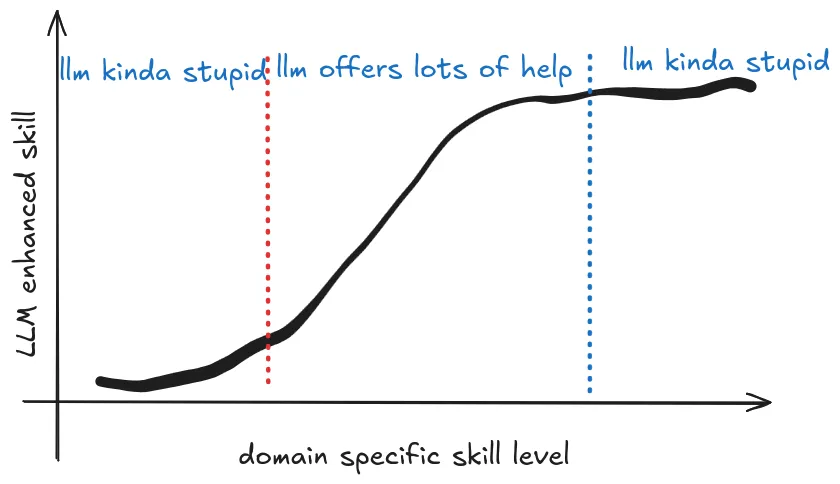

I see this sometimes in programming too, when people new to programming often aren’t able to leverage AI tools properly because of the lack of a proper dev environment, and lacking the common sense to fix a few software mistakes. I think it’d be interesting to see a plot, comparing domain specific skills and productivity increase after using an LLM. I’d imagine it’d look something like this:

Either way, it’s back to the basics for me. One more skill to add to my “generalist” arsenal. I’m especially happy to be working on learning such skills, because this clearly isn’t something within my capability right now (AI-enabled or not).

Side/end note: atopile seems pretty cool. I discovered it after writing this post, when I got a bit more curious and googled around. Might try it out and update this post or post a new one. But I still don’t think it’s likely to make me competent.